Research talk 8 - Explain and answer - Intelligent systems which communicate about what they see

Marcus Rohrbach is a postdoc at UC Berkeley, and his work won the best paper award at NAACL 2016. In this lecture, he presents a very exciting combination of state-of-the-art Computer Vision and Natural Language Processing. We are already quite familiar with another CV-NLP task: generating a descriptive caption for an image. In this work, instead of generating descriptive text, the system answers questions about a given image.

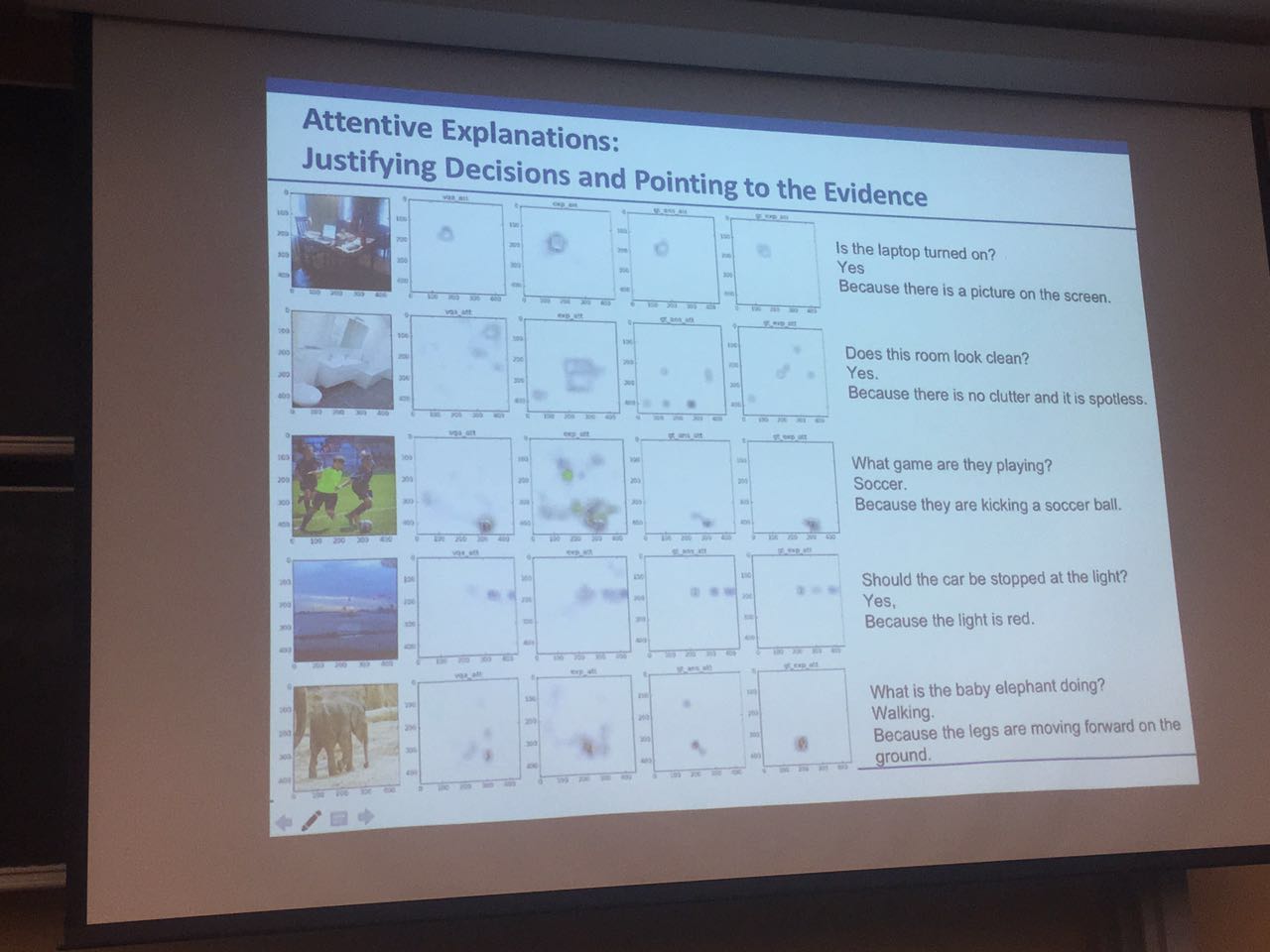

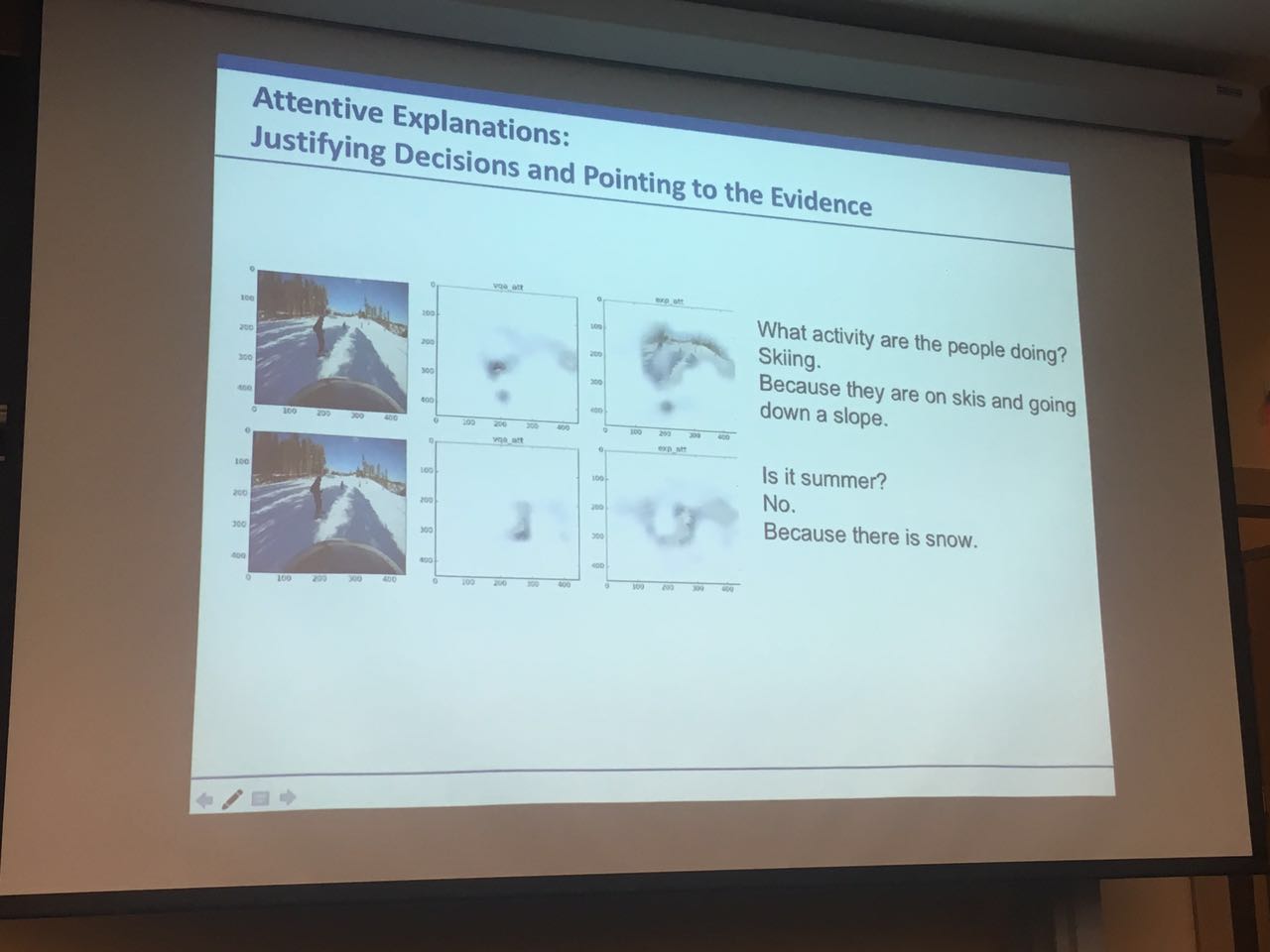

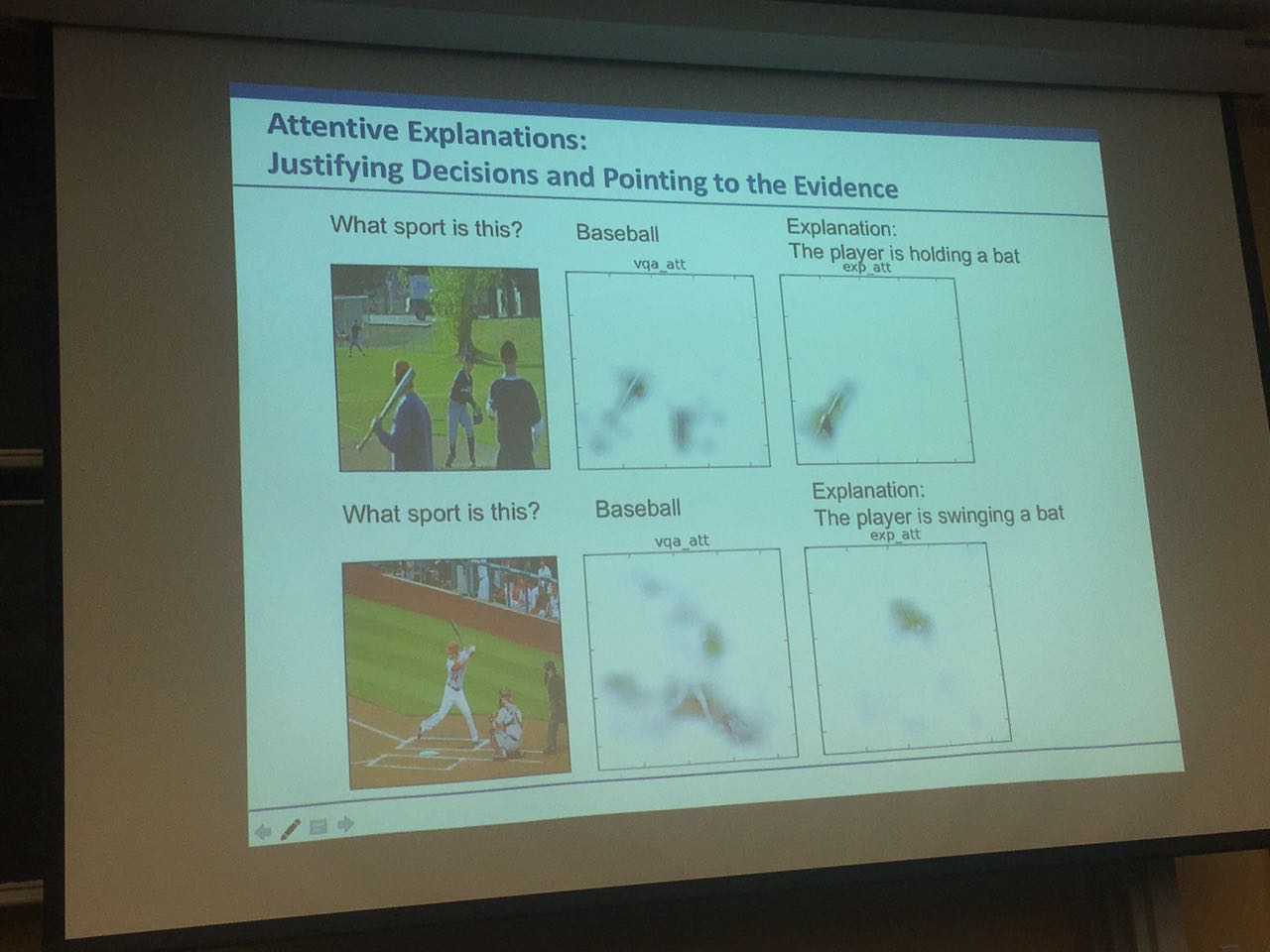

In real life, most information is gained by interacting with the visual world, and language is the most important channel through which humans communicate about what they see. For now, however, language and vision are only loosely connected. Thanks to recent developments in computer vision and natural language processing, a tighter integration of the two is becoming possible. Marcus’s work focuses on two components of successful communication. The first is the ability to answer natural language questions about the visual world. The second is the ability of the system to explain, in natural language, why it gave a certain answer, which allows a human to understand and trust it.

Basically, the vision part is implemented with Convolutional Neural Networks, and language is processed with Recurrent Neural Networks. They build models that answer questions while highlighting the semantically relevant parts of the image, which exposes the models' semantic reasoning structure. A number of other tasks are also addressed, e.g. video caption generation and automatic role matching.
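To make the idea of "highlighting the relevant parts of the image" more concrete, here is a minimal sketch of one common way to do it: soft attention over image regions. This is an illustration under assumptions, not the model from the talk. The region features stand in for CNN outputs, the question vector stands in for an RNN encoding, and the names `softmax`, `attend`, `regions`, and `question` are all hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(region_features, question_vector):
    """Score each image region against the question and pool the regions.

    region_features: list of feature vectors, one per image region
                     (assumed to come from a CNN in a real system).
    question_vector: a single vector encoding the question
                     (assumed to come from an RNN in a real system).
    Returns the attention weights and the weighted average of the regions.
    """
    # Relevance of each region = dot product with the question vector.
    scores = [
        sum(r * q for r, q in zip(region, question_vector))
        for region in region_features
    ]
    weights = softmax(scores)
    dim = len(question_vector)
    attended = [
        sum(w * region[i] for w, region in zip(weights, region_features))
        for i in range(dim)
    ]
    return weights, attended


# Toy data: three regions with 2-dimensional features (made up for illustration).
regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
question = [0.0, 2.0]

weights, attended = attend(regions, question)
# Index of the region the sketch would "highlight" for this question.
most_relevant = max(range(len(weights)), key=weights.__getitem__)
```

In a full system, the pooled vector `attended` would be combined with the question encoding and passed to a classifier that predicts the answer, while `weights` could be rendered as a heat map over the image to show which regions influenced the answer.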