About

I am Rui Meng (孟睿), currently a research scientist at Salesforce Research. My research focuses on Natural Language Processing and Deep Learning. In particular, I am interested in Representation Learning and Language Grounding.

I obtained my Ph.D. from the School of Computing and Information, University of Pittsburgh, where I was advised by Prof. Daqing He and Prof. Peter Brusilovsky. Before my Ph.D. at Pitt, I received my bachelor's and master's degrees from Wuhan University, where I was advised by Prof. Wei Lu. I interned at Google Research (2018, 2020), Salesforce Research (2019) and Yahoo! Research (2017).

Check out my resume for more information.

Recent Posts

Some Thoughts on the Nature of Language

I read an article and jotted down a few thoughts. Discussion is welcome.

Links to the original article:

English version:

Real talk

Chinese version: 与生俱来还是后天习得?人类如何获得语言能力? (Innate or Acquired? How Do Humans Gain the Ability to Use Language?)

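Originally, sound alone was enough for language, although gestures and context certainly help too. Khoisan languages have as many as 144 distinct sounds, but that is still tiny compared with the number of written words. Yet by combining and ordering relatively simple elements, such a language at least meets the needs of local communication. Unfortunately, humans cannot preserve information through sound, so speech cannot serve communication across time and space. Hence writing emerged as a way to encode sound, and it also introduced some ambiguity (for example, words that sound the same but are written differently).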

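Clearly, the physical world's influence on language is not limited to the storage medium. I feel that its biggest influence is that language can only exist as a sequence. Specifically, time forces language to unfold from beginning to end, because a person can produce only one sound at a time and cannot transmit information in two or more dimensions, such as speaking a picture or an object into the air :D Otherwise, other, more fun forms of language might have emerged. This may also be why sequence-oriented models such as RNNs model language better than CNNs do (just a guess, but criticism is welcome).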

A Brief Review of Neural Network on Spoken Language Understanding

One of my projects requires keyphrase extraction from scientific text. Since most keyphrases appear in the text itself, I am considering whether this problem can be framed as a sequence labeling task, just like NER and POS tagging.
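To make the framing concrete, here is a minimal sketch of how keyphrases that appear in a text could be turned into per-token labels. The function name `tag_keyphrases`, the `B-KP`/`I-KP`/`O` label names, and the example sentence are all made up for illustration; exact lowercase token matching is a simplifying assumption, not a method taken from any of the papers.

```python
def tag_keyphrases(tokens, keyphrases):
    """Label each token as B-KP, I-KP, or O by exact (case-insensitive) matching."""
    labels = ["O"] * len(tokens)
    lowered = [t.lower() for t in tokens]
    # Try longer phrases first so a short phrase cannot block a longer one.
    for phrase in sorted(keyphrases, key=lambda p: -len(p.split())):
        words = phrase.lower().split()
        n = len(words)
        if n == 0:
            continue  # skip empty phrases
        for i in range(len(tokens) - n + 1):
            window_free = all(label == "O" for label in labels[i:i + n])
            if lowered[i:i + n] == words and window_free:
                labels[i] = "B-KP"          # first token of the keyphrase
                for j in range(i + 1, i + n):
                    labels[j] = "I-KP"      # remaining tokens of the keyphrase
    return labels


# Hypothetical example sentence and keyphrases.
tokens = "We study keyphrase extraction with recurrent neural networks".split()
labels = tag_keyphrases(tokens, ["keyphrase extraction", "neural networks"])
for token, label in zip(tokens, labels):
    print(f"{token}\t{label}")
```

Keyphrases that never appear in the text get no labels at all under this scheme, which is one limitation of treating extraction purely as sequence labeling.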

Recently I came across a few papers on neural network applications to slot filling, a subtask of spoken language understanding (SLU). This task can likewise be addressed as a standard sequence labeling task, so I hope to draw some inspiration from this research. The following are my notes on these papers. Note that this post does not cover all the neural network research on slot filling; most of the papers covered here are from MSR.

1. Task Introduction: Slot Filling and Recurrent Neural Network

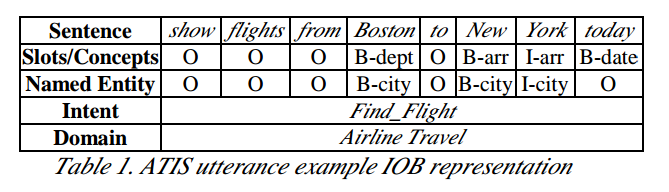

A brief description of the slot filling task and its data should help you understand what is going on here. The figure below shows an example from the ATIS dataset, annotated with slots/concepts, named entities, intent, and domain. The latter two annotations serve the other two SLU tasks: domain detection and intent determination. Slot filling is quite similar to NER and follows the same IOB tagging representation, but its labels are more fine-grained.

An example of the IOB representation for the ATIS dataset
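As a rough illustration of how IOB tags map back to slots, the sketch below groups a tagged sentence into (slot, value) pairs. The sentence, the tags, and the helper `iob_to_slots` are written for this note; the slot names only imitate the ATIS labeling style and are not copied from the figure.

```python
def iob_to_slots(tokens, tags):
    """Group IOB-tagged tokens into (slot_label, text) pairs."""
    slots = []
    current_label, current_words = None, []

    def close_current():
        # Store the slot being built, if there is one.
        if current_label is not None:
            slots.append((current_label, " ".join(current_words)))

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new slot.
            close_current()
            current_label, current_words = tag[2:], [token]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # An I- tag continues the slot that is currently open.
            current_words.append(token)
        else:
            # "O" (or an I- tag with no matching open slot) ends the slot.
            close_current()
            current_label, current_words = None, []
    close_current()
    return slots


# Illustrative sentence with ATIS-style slot names (assumed, not from the figure).
tokens = ["show", "flights", "from", "boston", "to", "new", "york", "today"]
tags = ["O", "O", "O", "B-fromloc.city_name", "O",
        "B-toloc.city_name", "I-toloc.city_name", "B-depart_date.today_relative"]
slots = iob_to_slots(tokens, tags)
for label, text in slots:
    print(f"{label}: {text}")
```

The `B-` prefix is what lets two adjacent slots of the same type stay separate, which a plain inside/outside scheme could not express.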

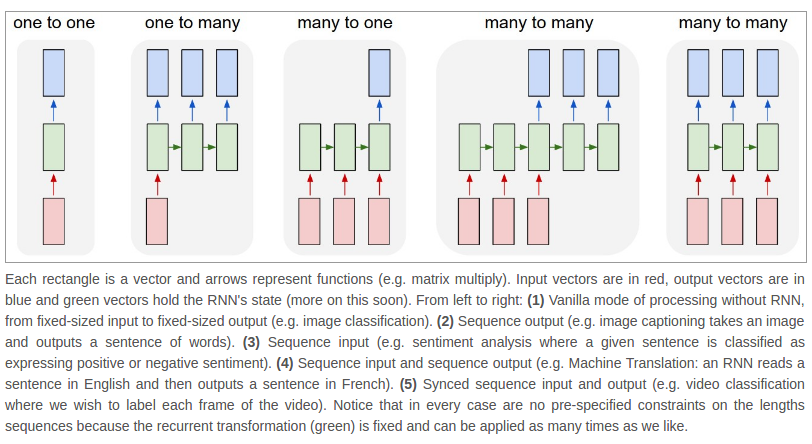

So our task is to translate the original sentence into its IOB tag sequence. Among the RNN structures shown below, the one we use for sequence labeling is the last, which outputs a label for each input word. Many excellent articles offer an intuitive understanding of RNNs and related techniques, so I won't go into much detail here.

Some common architectures of recurrent neural networks
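The architecture that emits one label per input word can be sketched as a plain Elman-style forward pass. This is only a toy under stated assumptions: the weights are random and untrained, biases are omitted, the "word vectors" are random numbers, and every name here (`rnn_tag`, `W_xh`, `W_hh`, `W_hy`, the three-label set) is hypothetical rather than taken from the papers.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_tag(inputs, W_xh, W_hh, W_hy, labels):
    """Run a simple RNN over the inputs and pick one label per time step."""
    hidden = [0.0] * len(W_hh)  # initial hidden state
    predictions = []
    for x in inputs:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1})
        pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, hidden))]
        hidden = [math.tanh(v) for v in pre]
        # y_t = softmax(W_hy h_t), then take the most probable label
        probs = softmax(matvec(W_hy, hidden))
        predictions.append(labels[probs.index(max(probs))])
    return predictions


def random_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
labels = ["O", "B-slot", "I-slot"]   # hypothetical label set
input_dim, hidden_dim = 4, 5
W_xh = random_matrix(hidden_dim, input_dim)
W_hh = random_matrix(hidden_dim, hidden_dim)
W_hy = random_matrix(len(labels), hidden_dim)

# Six random vectors stand in for the embeddings of a six-word sentence.
sentence = [[random.uniform(-1, 1) for _ in range(input_dim)] for _ in range(6)]
predicted = rnn_tag(sentence, W_xh, W_hh, W_hy, labels)
print(predicted)
```

Because the weights are untrained, the predicted labels are meaningless; the point is only that the output sequence has exactly one label per input word, with each prediction depending on the words seen so far through the hidden state.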

2. Paper Notes

2.1 Investigation of Recurrent-Neural-Network Architectures and Learning Methods for Spoken Language Understanding