A Brief Review of Neural Networks for Spoken Language Understanding

One of my projects requires keyphrase extraction from scientific text. Since most keyphrases appear in the text itself, I am considering whether this problem can be framed as a sequence labeling task, just like NER and POS tagging.

Recently I came across a few papers that apply neural networks to slot filling, a subtask of spoken language understanding (SLU). Slot filling can also be treated as a standard sequence labeling task, so I hope to draw some inspiration from this line of research. The following are my notes on these papers. Note that this post does not cover all neural network research on slot filling; most of the papers discussed here come from Microsoft Research (MSR).

1. Task Introduction: Slot Filling and Recurrent Neural Network

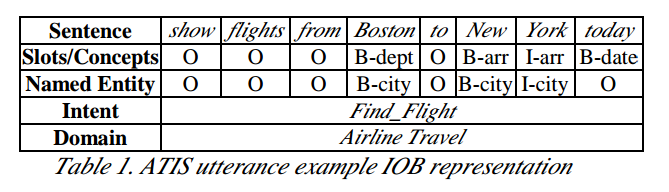

A short description of the slot filling task and its data will help you understand what is going on here. The accompanying figure shows an example from the ATIS dataset, annotated with slots/concepts, named entities, intent, and domain. The latter two annotations belong to the other two SLU tasks: domain detection and intent determination. Slot filling is quite similar to NER: both use the IOB tagging representation, but slot labels are more fine-grained.

An example of the IOB representation for the ATIS dataset
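To make the IOB representation concrete, here is a minimal sketch in Python. The sentence, the slot spans, and the helper names `spans_to_iob` and `iob_to_slots` are my own illustrative assumptions; they are not copied from the figure or from any of the papers. The slot names only follow the general ATIS naming style.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start index, end index exclusive, slot name)


def spans_to_iob(tokens: List[str], spans: List[Span]) -> List[str]:
    """Turn slot spans into one IOB tag per token."""
    tags = ["O"] * len(tokens)          # "O" = outside any slot
    for start, end, slot in spans:
        tags[start] = f"B-{slot}"       # "B-" marks the first word of a slot
        for i in range(start + 1, end):
            tags[i] = f"I-{slot}"       # "I-" marks the following words
    return tags


def iob_to_slots(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Recover (slot name, text) pairs from an IOB-tagged sentence."""
    slots = []
    current_slot, current_words = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if current_slot is not None:
                slots.append((current_slot, " ".join(current_words)))
            current_slot, current_words = tag[2:], [token]
        elif tag.startswith("I-") and current_slot == tag[2:]:
            current_words.append(token)
        else:
            # "O", or an "I-" tag that does not continue the open slot:
            # close whatever slot is open and treat this token as outside.
            if current_slot is not None:
                slots.append((current_slot, " ".join(current_words)))
            current_slot, current_words = None, []
    if current_slot is not None:
        slots.append((current_slot, " ".join(current_words)))
    return slots


# Illustrative ATIS-style sentence (an assumption, not taken from the figure).
tokens = "show flights from boston to new york today".split()
spans = [
    (3, 4, "fromloc.city_name"),
    (5, 7, "toloc.city_name"),
    (7, 8, "depart_date.today_relative"),
]
tags = spans_to_iob(tokens, spans)
for token, tag in zip(tokens, tags):
    print(f"{token:8s} {tag}")
print(iob_to_slots(tokens, tags))
```

The point of the round trip is that the tag sequence carries the same information as the slot spans, which is why slot filling can be handled by any model that emits one label per word.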

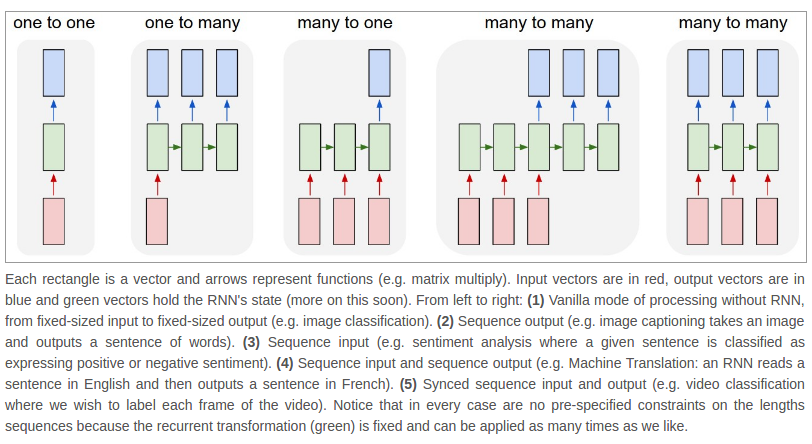

So our task is to translate the original sentence into its IOB tag sequence. Among the RNN structures in the accompanying figure, the one suited to sequence labeling is the last one, which outputs a label for each input word. There are many excellent articles that offer an intuitive understanding of RNNs and the techniques around them, so I won't go into much detail here.

Some common architectures of recurrent neural networks
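As a rough illustration of the "one label per input word" structure, here is a minimal sketch of the forward pass of an Elman-style RNN tagger in plain Python. Everything in it is an assumption made for illustration: the class name `ElmanTagger`, the toy vocabulary and label set, the dimensions, and the random, untrained weights. It is not the model from any of the papers below, and its predictions are meaningless until the weights are trained.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def softmax(scores):
    top = max(scores)                      # subtract max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ElmanTagger:
    """Hypothetical many-to-many RNN: one label distribution per input word."""

    def __init__(self, vocab, labels, embed_dim=8, hidden_dim=16, seed=0):
        rng = random.Random(seed)
        self.word_to_id = {word: i for i, word in enumerate(vocab)}
        self.labels = labels
        self.hidden_dim = hidden_dim
        self.E = random_matrix(len(vocab), embed_dim, rng)       # word embeddings
        self.W_xh = random_matrix(hidden_dim, embed_dim, rng)    # input -> hidden
        self.W_hh = random_matrix(hidden_dim, hidden_dim, rng)   # hidden -> hidden
        self.W_hy = random_matrix(len(labels), hidden_dim, rng)  # hidden -> labels

    def forward(self, tokens):
        h = [0.0] * self.hidden_dim        # initial hidden state
        outputs = []
        for token in tokens:
            x = self.E[self.word_to_id[token]]
            pre = [a + b for a, b in zip(matvec(self.W_xh, x), matvec(self.W_hh, h))]
            h = [math.tanh(v) for v in pre]                 # h_t = tanh(W_xh x_t + W_hh h_{t-1})
            outputs.append(softmax(matvec(self.W_hy, h)))   # label distribution at step t
        return outputs

    def predict(self, tokens):
        return [
            self.labels[max(range(len(probs)), key=probs.__getitem__)]
            for probs in self.forward(tokens)
        ]


sentence = "flights from boston to denver".split()
label_set = ["O", "B-fromloc.city_name", "B-toloc.city_name"]
tagger = ElmanTagger(vocab=sorted(set(sentence)), labels=label_set)
distributions = tagger.forward(sentence)
predicted = tagger.predict(sentence)
print(list(zip(sentence, predicted)))  # untrained, so the labels are arbitrary
```

The hidden state `h` is the only thing carried from one word to the next, so the label for each word can depend on the words before it but not on those after it.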

2. Paper Notes

2.1 Investigation of Recurrent-Neural-Network Architectures and Learning Methods for Spoken Language Understanding